Cross-sensor iris recognition using adversarial strategy and sensor-specific information

Abstract

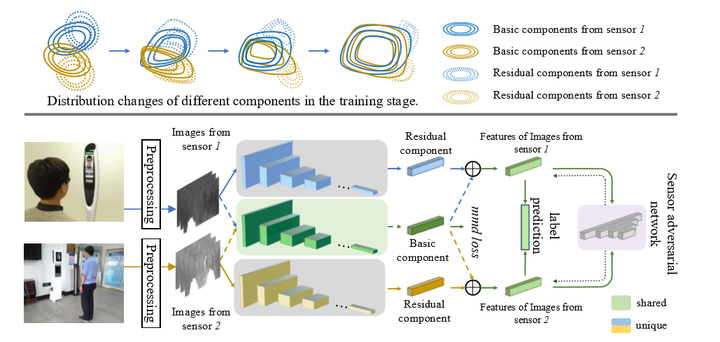

Due to the growing demand for iris biometrics, many new sensors are being developed for high-quality image acquisition. However, upgrading sensors and re-enrolling users is expensive and time-consuming. This leads to a dilemma in which users are enrolled on one type of sensor but recognized on others. In this cross-sensor matching setting, the large gap between the distributions of enrollment and recognition images usually degrades recognition performance. To alleviate this degradation, we propose the Cross-sensor iris network (CSIN), which applies an adversarial strategy and weakens the interference of sensor-specific information. Specifically, we make three contributions towards learning discriminative iris features. First, CSIN adds extra feature extractors to generate residual components containing sensor-specific information and then uses these components to narrow the distribution gap. Second, an adversarial strategy borrowed from Generative Adversarial Networks aligns feature distributions and further reduces the image discrepancy caused by sensors. Finally, we extend triplet loss and propose an instance-anchor loss that pulls instances of the same class together and pushes them away from instances of other classes. Notably, the proposed method does not need same-class pairs or triplets, which reduces the cost of data preparation. Experiments on two real-world datasets validate the effectiveness of the proposed method in cross-sensor iris recognition.
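
The abstract does not give the form of the instance-anchor loss, so the following is only an illustrative sketch of one plausible reading, not the paper's actual formulation. It assumes that each class has an "anchor" taken as the mean embedding of that class within the batch, and that each instance is pulled toward its own anchor and pushed away from the nearest other anchor by a margin, in the style of triplet loss. The function name `instance_anchor_loss`, the `margin` parameter, and the use of squared Euclidean distance are all hypothetical choices made for this example.

```python
import numpy as np

def instance_anchor_loss(features, labels, margin=0.5):
    """Hypothetical anchor-based variant of triplet loss (an assumption,
    not the paper's definition).

    features: (n, d) array of embeddings.
    labels:   (n,) array of integer class ids.
    """
    features = np.asarray(features, dtype=float)
    labels = np.asarray(labels)
    classes = np.unique(labels)
    if classes.size < 2:
        raise ValueError("need at least two classes in the batch")

    # Assumed anchor: the mean embedding of each class in the batch.
    anchors = np.stack([features[labels == c].mean(axis=0) for c in classes])

    # dists[i, k] = squared Euclidean distance from instance i to anchor k.
    diffs = features[:, None, :] - anchors[None, :, :]
    dists = (diffs ** 2).sum(axis=2)

    # own[i, k] is True where anchor k belongs to the class of instance i.
    own = labels[:, None] == classes[None, :]
    pos = dists[own]                                 # distance to own anchor
    neg = np.where(own, np.inf, dists).min(axis=1)   # nearest other anchor

    # Hinge: own anchor should be closer than any other anchor by `margin`.
    return float(np.maximum(0.0, pos - neg + margin).mean())
```

Because the anchors are computed from class labels alone, this sketch needs no explicitly constructed pairs or triplets, which matches the property claimed in the abstract. Well-separated classes give a loss of zero, while classes whose anchors coincide give a loss equal to the margin.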